Data Synthesis and Deep Neural Networks

What do you do when you do not have enough data to train your deep learning model?

Collecting training data and properly labelling it is crucial for any machine learning project. It is probably the most expensive step too. If we do not correctly set up the foundation of further research, the entire effort could go wasted.

Until 2015, virtually everyone in the computer vision community was skeptical of the value of synthesized RGB data. The reasoning was centered around the assumption that the deep learning models can quickly pick up on the high-frequency artifacts of the synthesis process, and essentially learn to cheat their way to perfect accuracy.

I remember at the time I was playing Grand Theft Auto V on my GPU heavy research machine (I did it after hours).

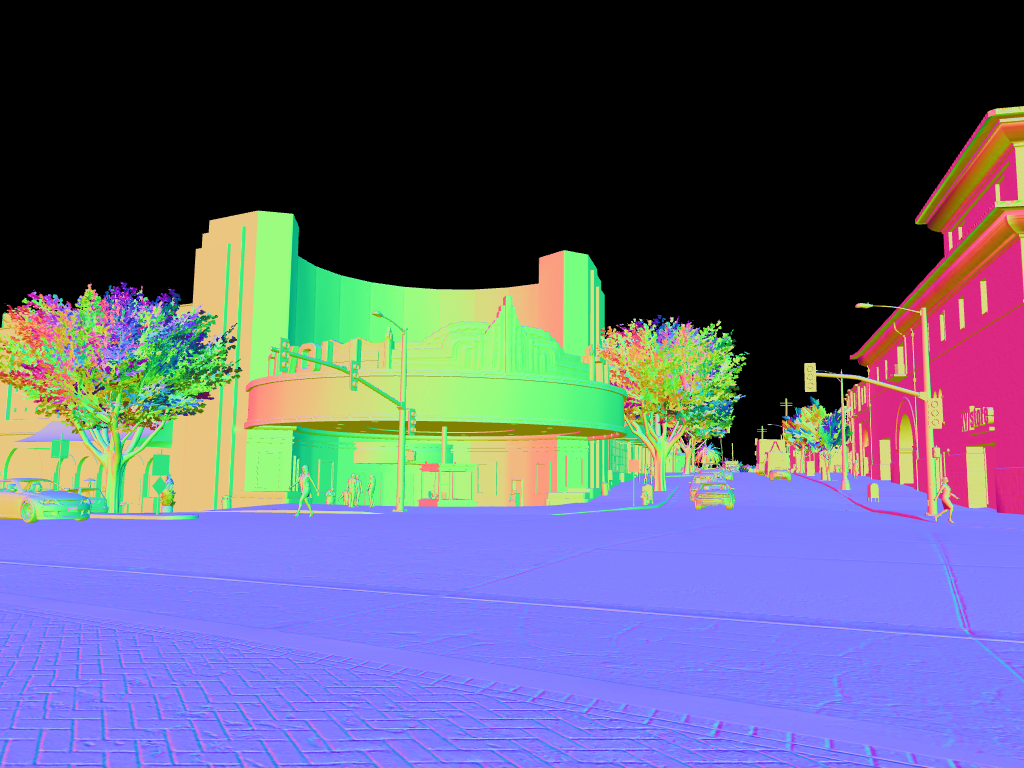

The game world seemed so realistic that at one point, I became curious whether we could extract some training data from the video game. The beautiful thing about synthetic data is that since you generate the data, you already have the groundtruth information for every scene. That essentially means near-zero cost to collect data.

Challenge #1: How can we extract training data from GTA V?

I had no idea how to go about this challenge. I knew, in principle, it was possible to create a middleware library to intercept all the DirectX/OpenGL function calls and collect data in the process, but not having any experience doing 3d rendering, I wasn’t sure what is the first step.

And then I had a lucky encounter with a blog post on GTA V - Graphics Study that directly lead me to Renderdoc, an open-source tool that allows you to collect GPU data in real-time.

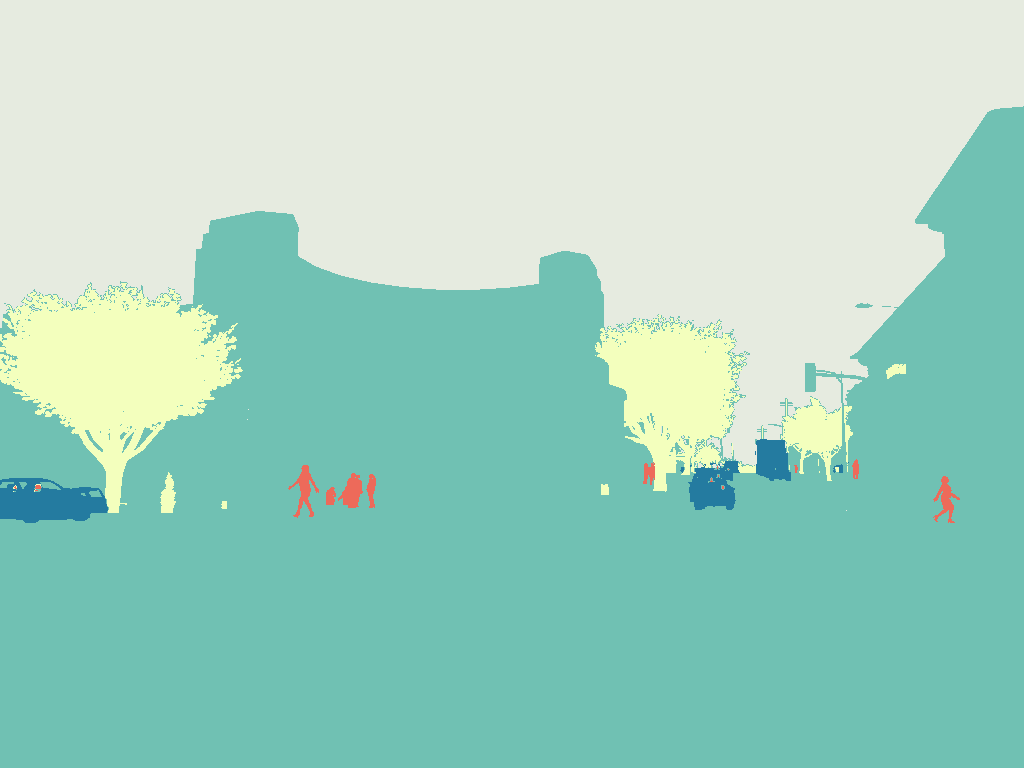

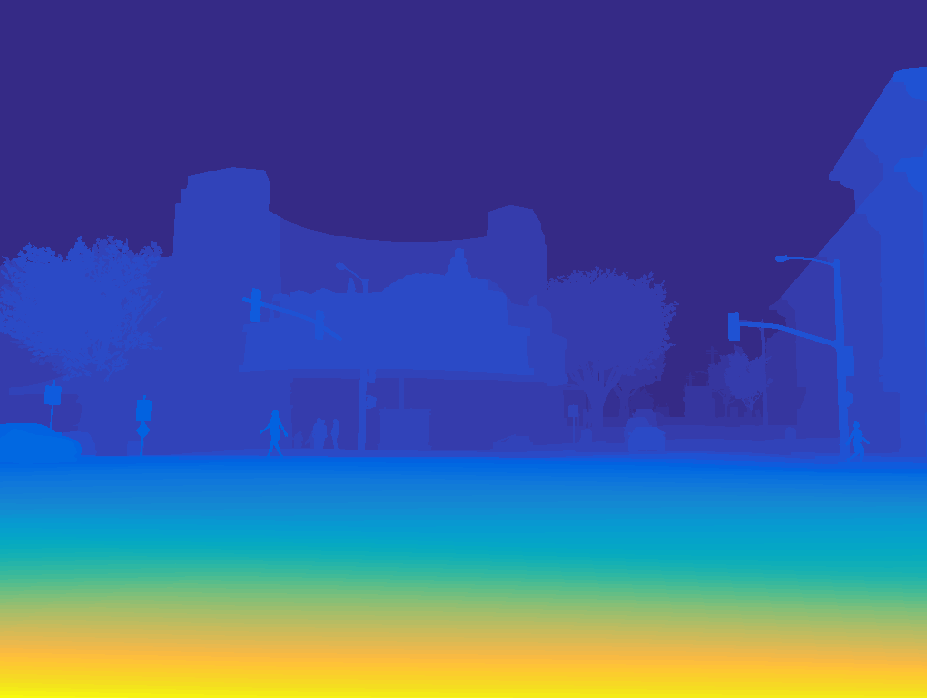

After a few days of hacking and customization, I was able to pull out some internal buffers from the video game.

Challenge #2: How to automate data collection?

I had written a modification over Renderdoc that would collect and store specific buffers of the current frame on disk on the press of a key. But the next question was, how do we automate the data collection process? At first, I tried driving around the game and capturing frames at regular intervals, but that quickly became a tiresome task. I then allowed my friends to play GTA V (during the regular hours!) to capture the data. I soon realized that this was not going to scale. I then had a second lucky encounter with the modding community. Luckily, GTA V has one of the most active modding communities on the internet. I was able to find a few open-source mods that communicated with the internal gaming engine that allowed you to trigger specific actions within the game (as if you were God).

Now I could change seasons, time of the day, the weather, traffic levels, etc. with a simple function call.

One of the exciting functions that I found in the game was setting the character on autopilot driving mode. I guess this function must have been used internally when a cut scene switched to playing mode. This was perfect. I could simply let the character drive on autopilot overnight, and another process regularly captures the frames.

I remember I walked in the next day and saw that my character had died! So I sat down and reviewed the captured frames. In the video game, I realized that other drivers may get angry at you for no reason and start shooting at you. Since I was not around to protect my character, he would die and start again at the hospital. After searching through all the internal game functions, I eventually found a way to keep my character alive. But I wasn’t done then. The automation still didn’t work because the other drivers would pull my character out of the car. After a few more hours of searching, I found another function to lock the car doors, and now it worked! Over a few weeks, I captured over 60,000 frames from the video game. Now the actual scientific research could begin, which resulted in my first Ph.D. paper:

Play and Learn: Using Video Games to Train Computer Vision Models.

Shafaei, Alireza, Little, James J., and Schmidt, Mark. In BMVC 2016.

PDF arXiv:1608.01745 - MIT Technology Review

We showed that contrary to the popular assumption that synthetic data is not good enough (at the time), we can actually train computer vision models on synthetic data to get them to recognize the real world. We discovered that the most effective way to train computer vision models is to first pre-train them on the synthetic data and then fine-tune the models on real data.

It’s been over six years now, and using synthetic data in the training of deep neural networks is a thing! When I was writing my thesis and did a retrospective look at the field, there were more than 20 synthetic datasets and at least five companies whose work was to syntehsize data for your research. But, of course, the publisher of the GTA games, Take-Two Interactive, was not particularly happy about the new interest (and chances of more scrutiny) in the game. So at some point, they sent out cease-and-desist letters to some of the researchers who were developing the tools to pull out training data [link]. The field is very active in this area now. Check out the most recent follow-up work I have seen from the Google Research team, Kubric.

The takeaway lesson from this post is that you should look for ways to synthesize data and use them for training deep learning models. It will be a very effective regularizer, at least, especially if you have limited access to labelled real-world data.